Cross Validated Issue #3: The Singularity: Is AI Approaching the Point of No Return? 🤖

The Rise of AGI and What It Means for Us

Welcome back! It's been an eventful week, and you may have heard about the unfolding drama at OpenAI. While there's no shortage of coverage and opinion on that topic online, let's turn our focus to the technological singularity, a concept that may well redefine our future!

Table of Contents

The Singularity: Is AI Approaching the Point of No Return?

Article Spotlight: Selecting Features with Shapley Values

AI Recap: Essential News You Might Have Missed

The Singularity: Is AI Approaching the Point of No Return? 🤖

The Rise of AGI and What It Means for Us

What is The Singularity?

Originally a mathematical concept, a singularity is a point at which a mathematical object is undefined or stops being well-behaved. For example, a curve may have no well-defined tangent at a cusp, or a function such as 1/x may blow up to infinity as x approaches 0.

Technological singularity implies an event horizon beyond which our current understanding cannot predict or control the outcomes of superintelligent machines' actions.

In plain English, the idea is that the invention of an artificial superintelligence would trigger runaway self-improvement that eventually surpasses all human intelligence. The robots would do it all and there would be no need to work!

Tracing the Roots of Technological Singularity

John von Neumann, a 20th-century Hungarian-American mathematician, is widely credited as the first to use the word “singularity” in this technological sense. He suggested that ever-accelerating technological progress would fundamentally alter human life.

Science fiction writer Vernor Vinge brought "the singularity" into the mainstream in 1983 through his op-ed in Omni Magazine. According to Vinge:

When this happens, human history will have reached a kind of singularity, an intellectual transition as impenetrable as the knotted space-time at the centre of a black hole, and the world will pass far beyond our understanding.

This singularity, I believe, already haunts a number of science-fiction writers. It makes realistic extrapolations to an interstellar future impossible.

Scary, isn't it? Even prominent technologists such as Elon Musk have expressed concerns about the rise of artificial intelligence and the prospect of the singularity.

The irony is that entrepreneurs such as Musk, an original co-founder of OpenAI who has since left the organization, are major drivers behind the rapid advancement of artificial intelligence systems like ChatGPT.

Musk's venture Neuralink is developing brain-computer interfaces, which may eventually be driven by advanced AI: an exciting yet potentially dangerous combination.

What’s the Timeline?

So where are we on the timeline toward the singularity?

This is obviously not an easy question to answer. We are already seeing rapid advancements in artificial intelligence, which is the foundation of any singularity scenario.

However, it is worth noting that we are still far from achieving artificial general intelligence (AGI).

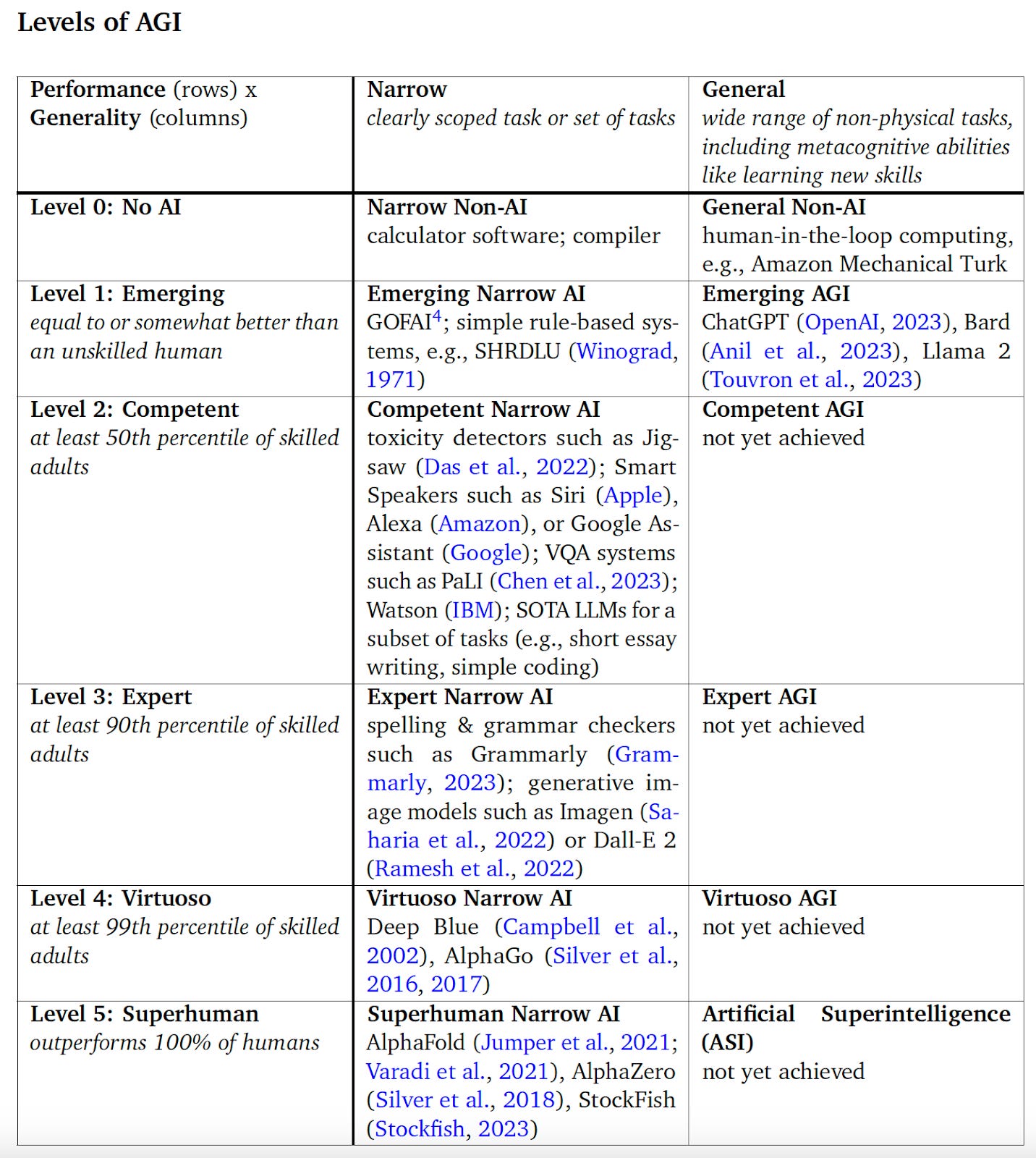

According to a recent DeepMind paper that proposes a framework of AGI levels, we are only at Level 1. Even though ChatGPT is seemingly capable of doing everything, it only qualifies as an "Emerging" AGI.

If you read our newsletter last week, we also mentioned recent research from Google, which suggests that models such as GPT struggle to generalize beyond their training data.

Therefore, we are still at the nascent stages of AGI.

It is interesting to consider, given the rapid advancements in AI-specific hardware and software, whether reaching AGI or even Artificial Superintelligence (ASI) is achievable within the next twenty to thirty years.

When do you think we’ll reach the singularity? And when we do, how will you occupy your time? 🤪

Article Spotlight 🔦:

Selecting Features with Shapley Values (Renato Boemer)

This article by Renato Boemer introduces Shapley values, a tool that helps ML practitioners select the features that matter most to a model. Read the article here. Find Renato on Medium.
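For a feel of the idea before you click through, here is a minimal sketch of how a Shapley value is computed: each feature's value is its average marginal contribution to a score, taken over every order in which the features could be added. This illustrates the general definition only, not the method from Renato's article. The feature names and the `toy_score` function are made up for the example.

```python
from itertools import permutations
from math import factorial


def shapley_values(features, value):
    """Exact Shapley values: average each feature's marginal
    contribution over all possible orderings of the features."""
    totals = {f: 0.0 for f in features}
    for order in permutations(features):
        seen = frozenset()
        for f in order:
            with_f = seen | {f}
            totals[f] += value(with_f) - value(seen)
            seen = with_f
    n_orders = factorial(len(features))
    return {f: total / n_orders for f, total in totals.items()}


def toy_score(subset):
    """Hypothetical model score for a subset of features (made-up numbers)."""
    score = 0.5                       # baseline with no features
    if "age" in subset:
        score += 0.1
    if "income" in subset:
        score += 0.2
    if "age" in subset and "income" in subset:
        score += 0.1                  # interaction bonus, split between the two
    return score                      # "noise" never changes the score


phi = shapley_values(["age", "income", "noise"], toy_score)
for name, contribution in phi.items():
    print(f"{name}: {contribution:.3f}")
```

In this toy setup `noise` ends up with a Shapley value of zero, which is the kind of signal you might use to drop a feature. Exact enumeration grows factorially with the number of features, so real projects typically rely on approximations.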

AI Recap: Essential News You Might Have Missed 📢

OpenAI's Ethical Crossroads Amidst Leadership Turmoil

OpenAI's ChatGPT has skyrocketed in popularity, yet the company faces a tumultuous ethical debate on the pace of AGI development, leading to CEO Sam Altman's brief ouster and swift reinstatement. This leadership rollercoaster reflects the industry's struggle to balance rapid innovation with responsible AI governance. The saga has heightened tensions within the company, with employees openly challenging the board's direction and considering mass resignations.

Read more: The OpenAI meltdown will only accelerate the artificial intelligence race (The Guardian)

Amazon Launches 'AI Ready' Training Initiative

Amazon announces the 'AI Ready' commitment to offer free AI skills training to 2 million people by 2025, launching new courses and scholarships. Aimed at addressing the AI talent gap, this initiative includes free courses, a partnership with Code.org, and scholarships for high school and university students, underscoring the increasing importance of AI skills in the workforce.

Read more: Amazon aims to provide free AI skills training to 2 million people by 2025 with its new ‘AI Ready’ commitment (Amazon)

Kyutai: France's Open Science Crusade in AI

Kyutai, a Paris-based AI research lab founded by French billionaire Xavier Niel with a hefty budget of $330 million, commits to advancing artificial general intelligence as an open-source venture. This non-profit lab aims to work transparently, publishing research and providing resources to the wider community, challenging the more closed-off practices of big tech companies. This initiative marks France's strategic move towards fostering innovation over regulation in the AI sector and makes open-source AI a kind of national asset.

Read more: Kyutai is a French AI research lab with a $330 million budget that will make everything open source (TechCrunch)

Anthropic Unveils Upgraded Claude 2.1

Anthropic's Claude 2.1 release expands the context window to 200,000 tokens, enabling the model to analyze extensive texts, and improves accuracy to reduce errors. The model can now also use external tools and APIs for enhanced problem-solving, showcasing significant strides in AI development amidst the industry's rapid evolution.

Read more: Anthropic’s Claude 2.1 release shows the competition isn’t rubbernecking the OpenAI disaster (TechCrunch)

Dutch AI Initiative Aims for Ethical Transparency

The Netherlands is creating GPT-NL, its own transparent and verifiable large language model (LLM), in response to the opacity of AI development at major tech companies. Financed by a €13.5 million government grant, this open model will allow public insight into its algorithms and data sources, embodying Dutch values in its design.

Read more: Netherlands building own version of ChatGPT amid quest for safer AI (TNW)